ChatBCG: Can AI Read Your Slide Deck?

Authors: Nikita Singh, Rob Balian, Lukas Martinelli

Abstract

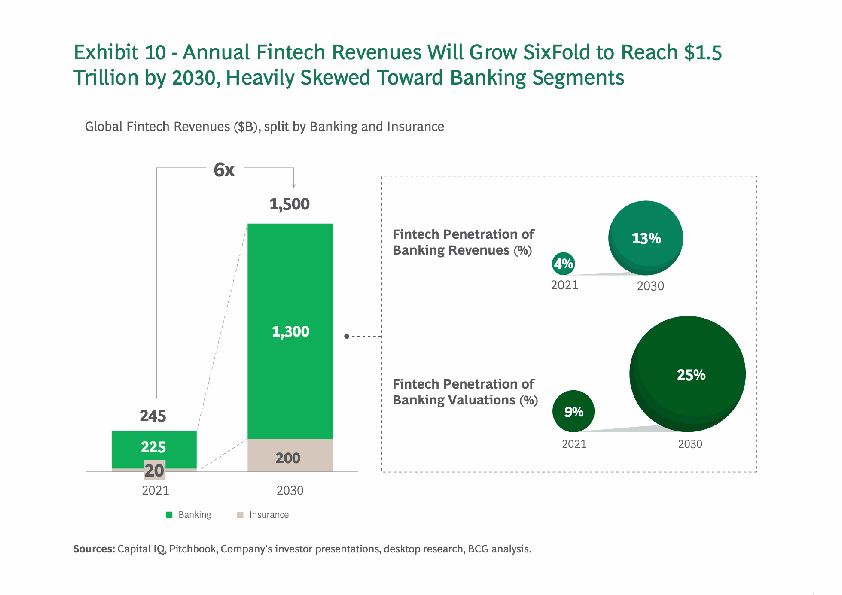

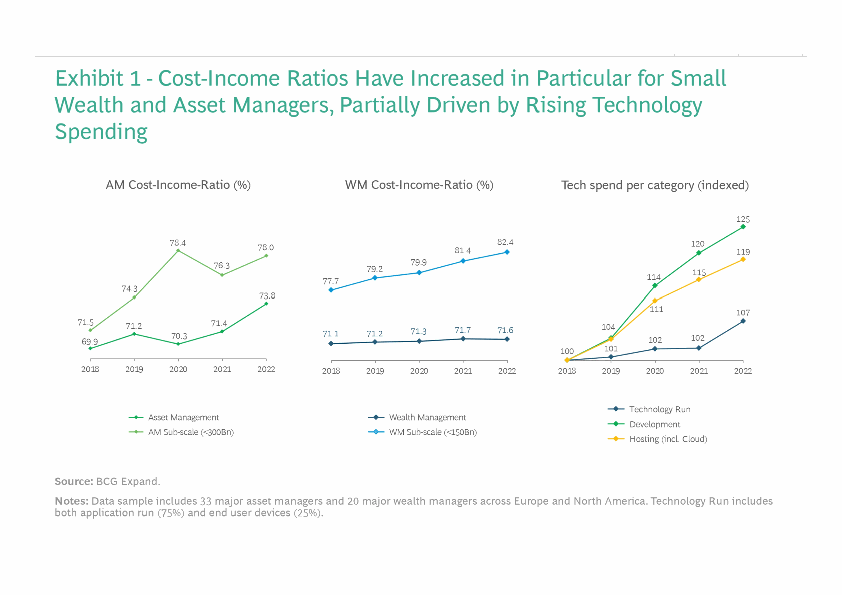

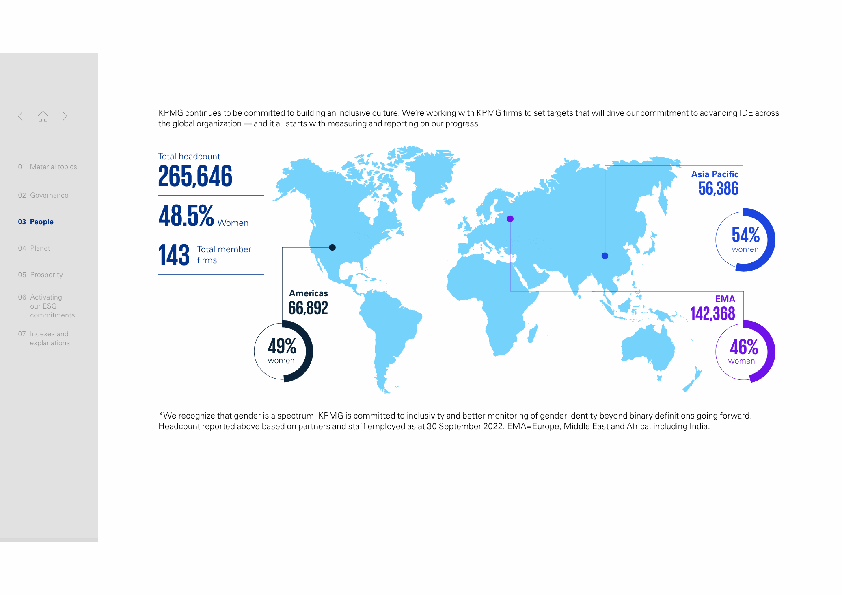

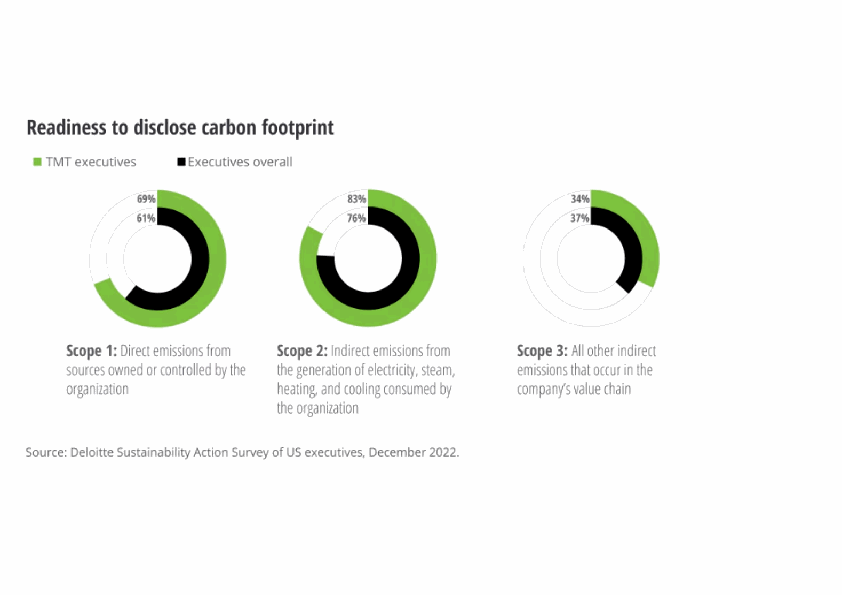

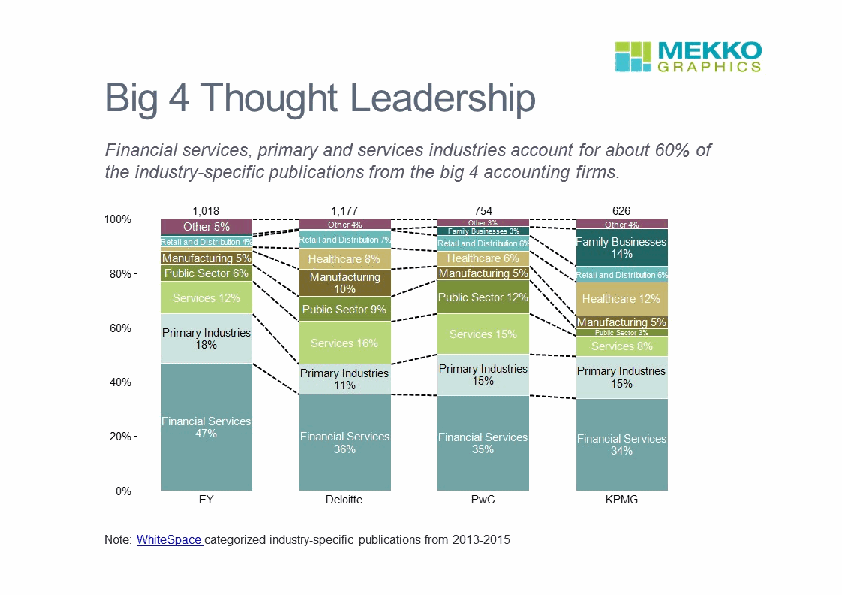

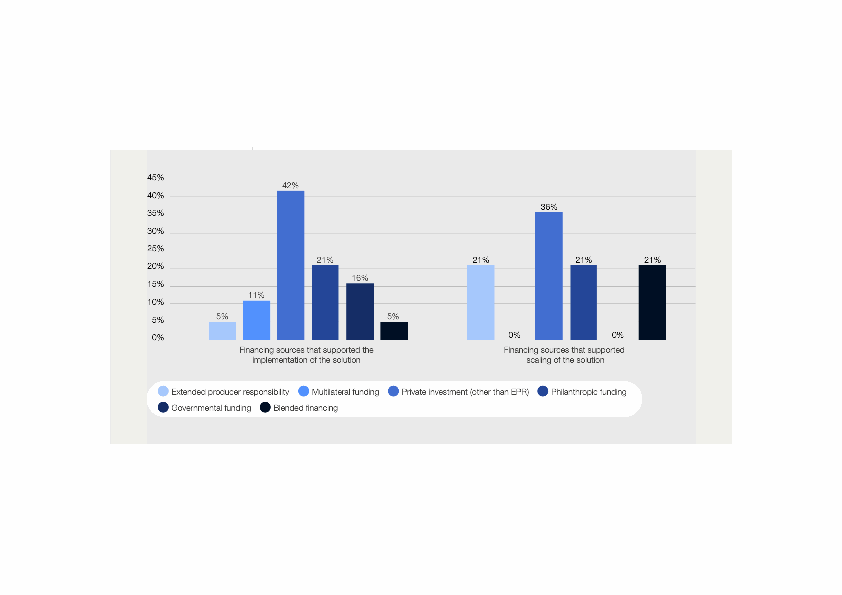

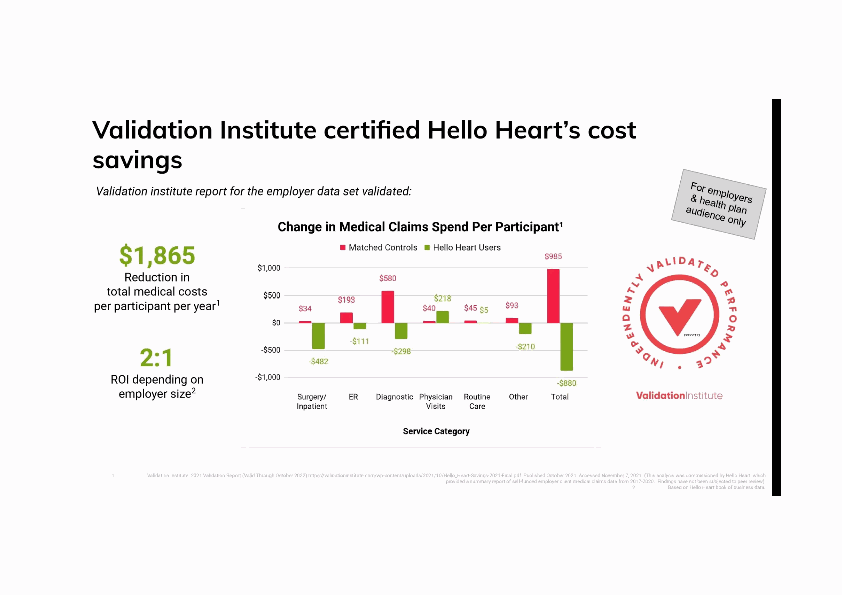

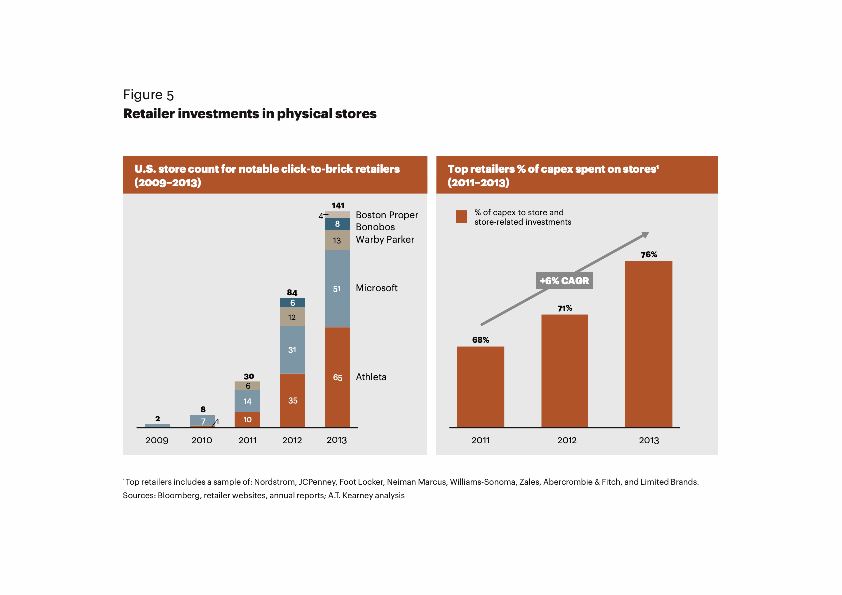

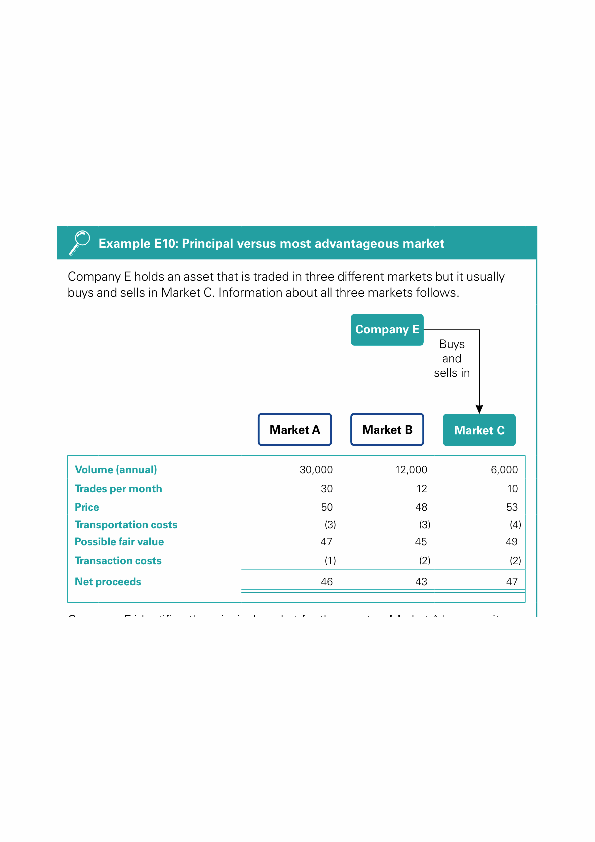

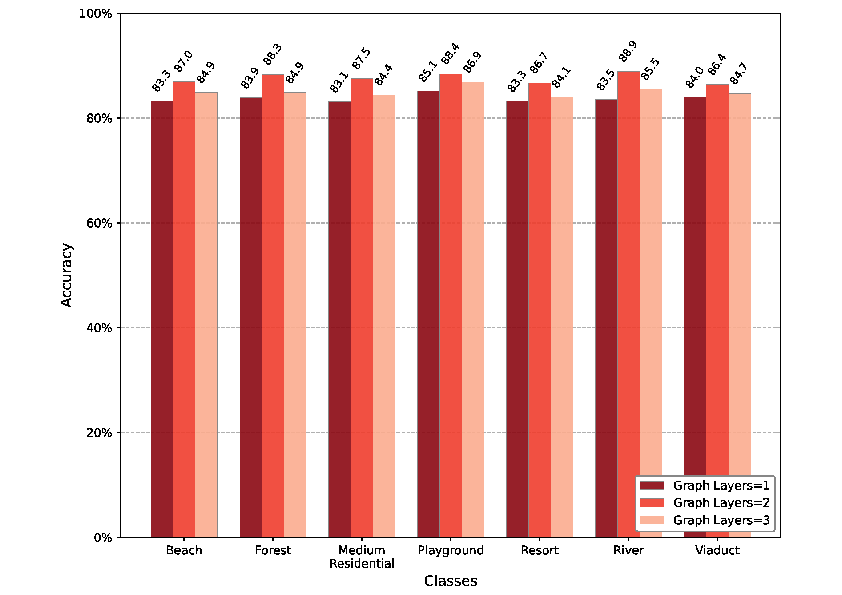

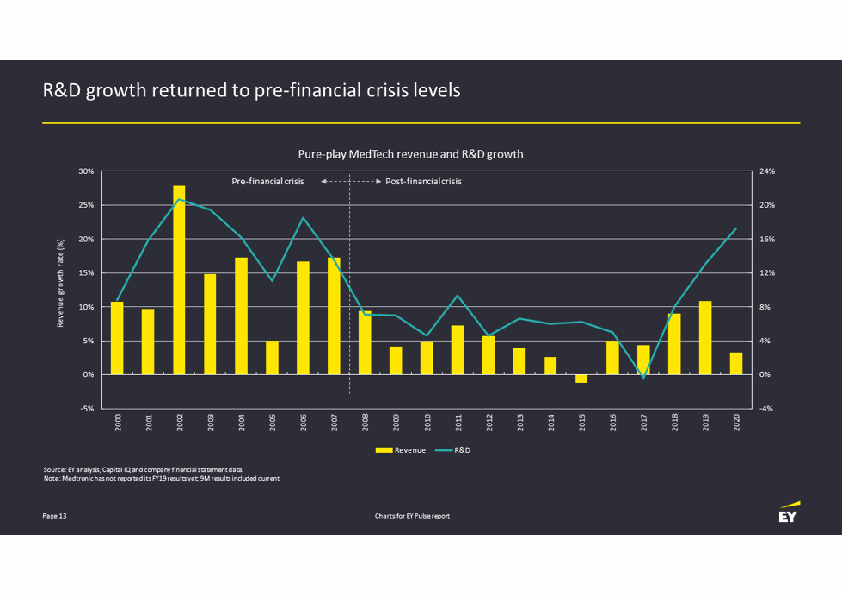

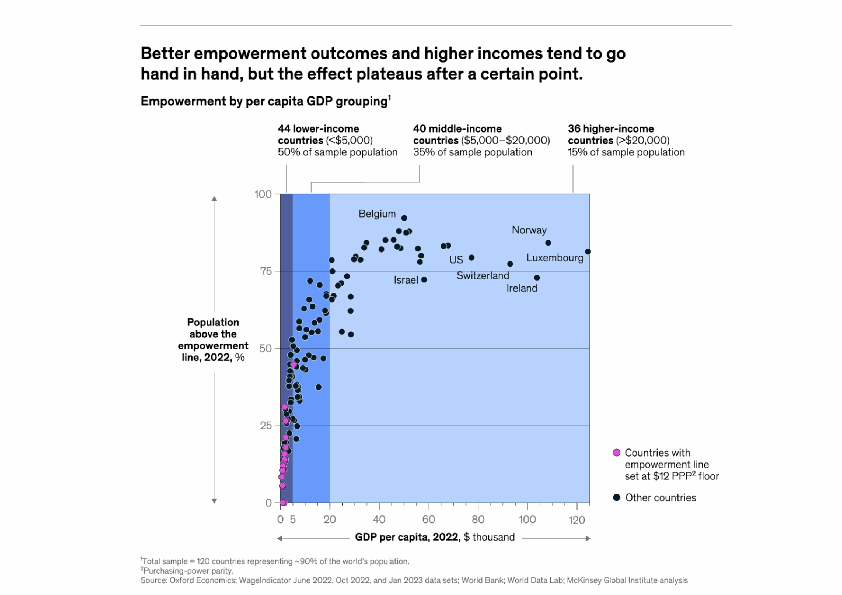

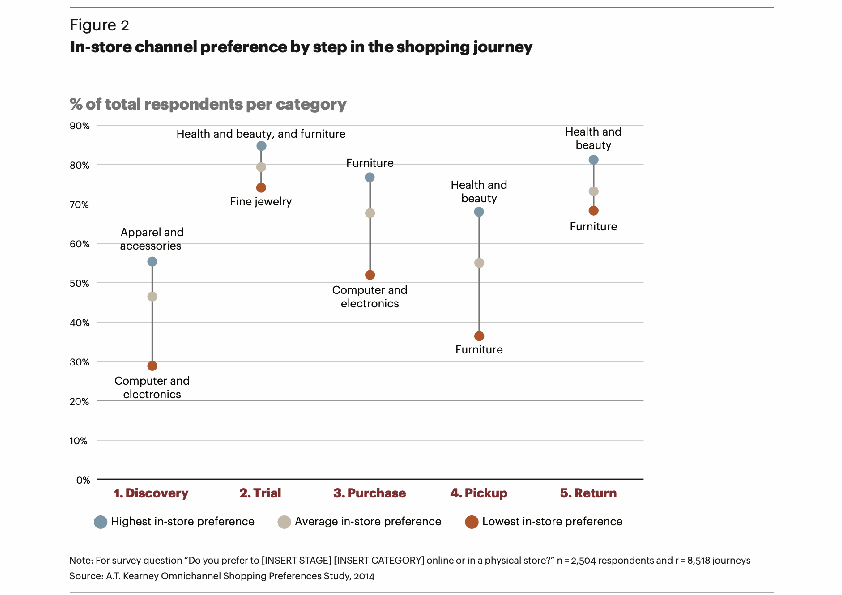

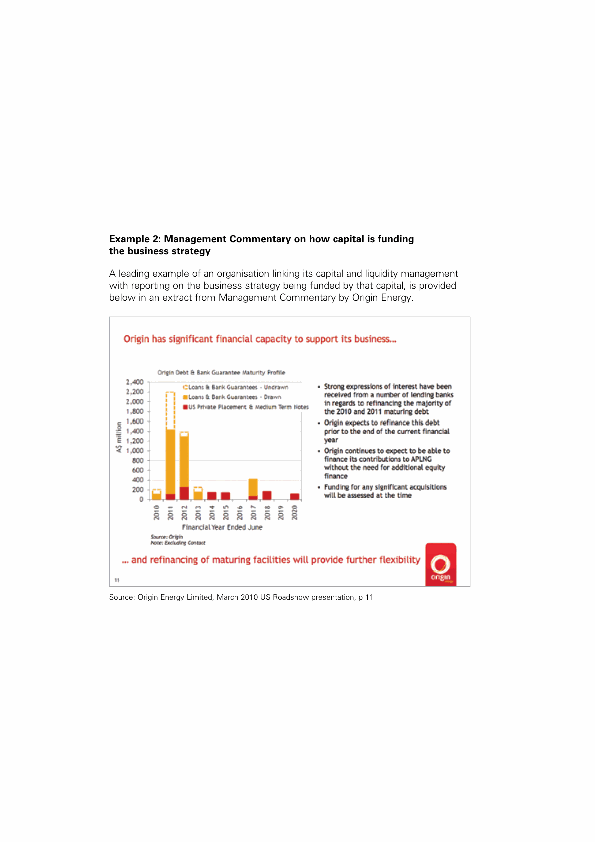

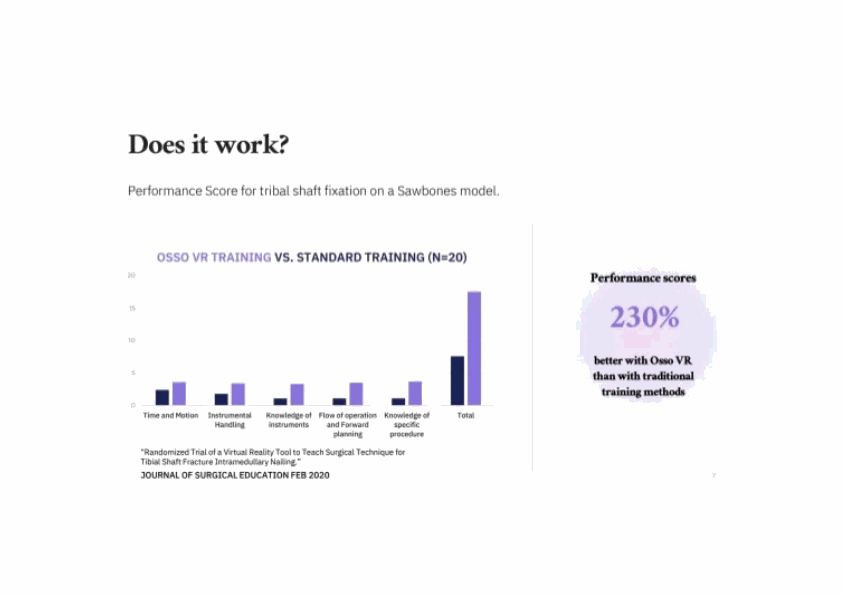

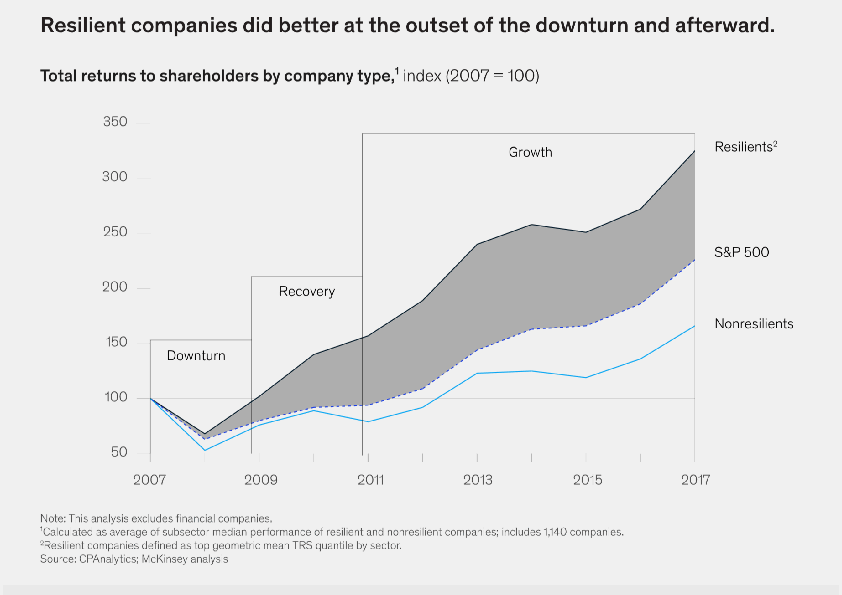

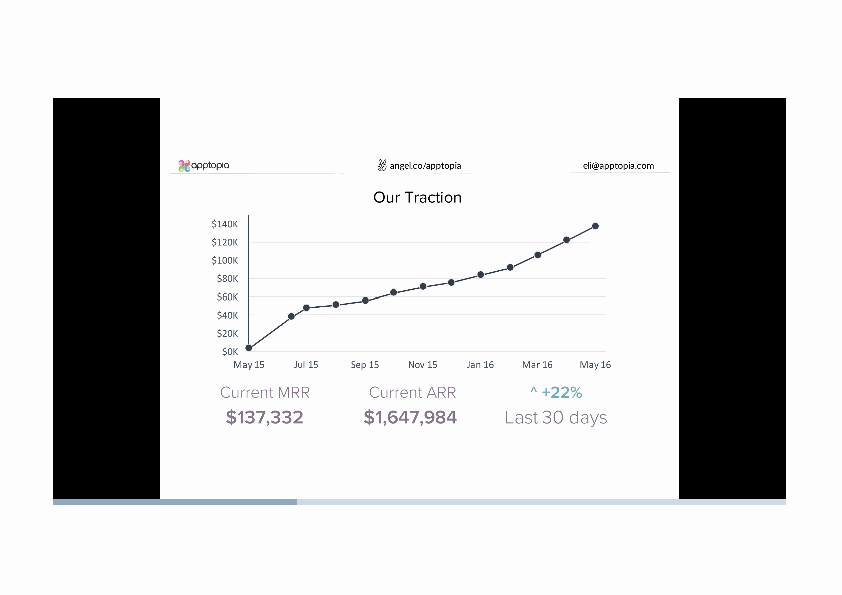

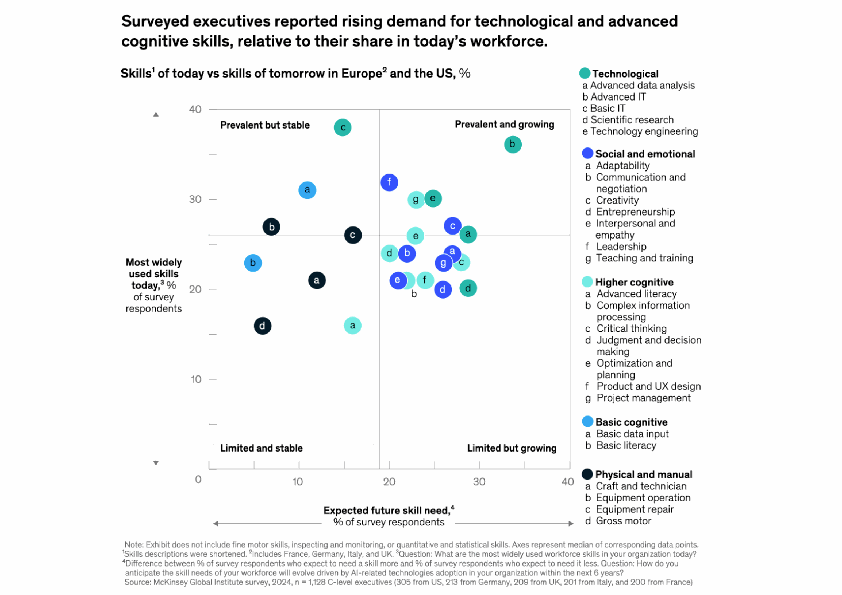

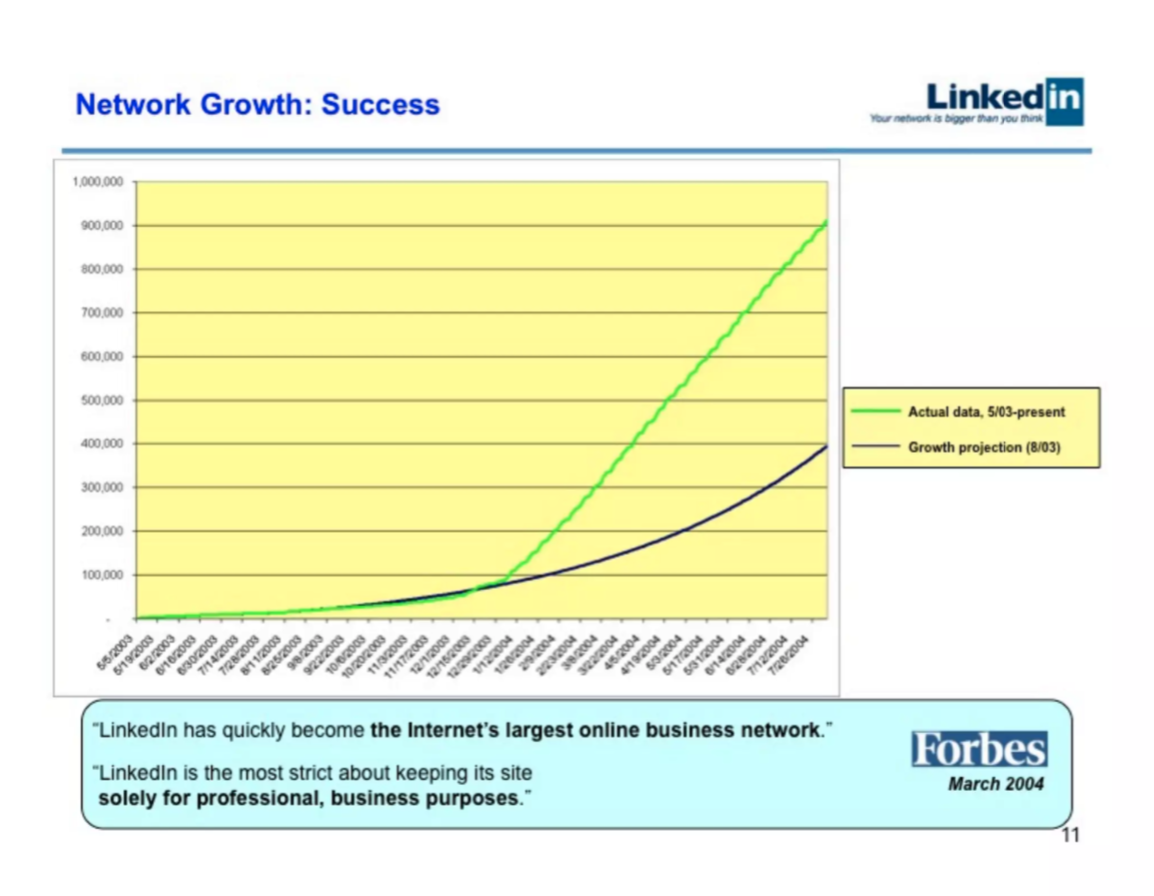

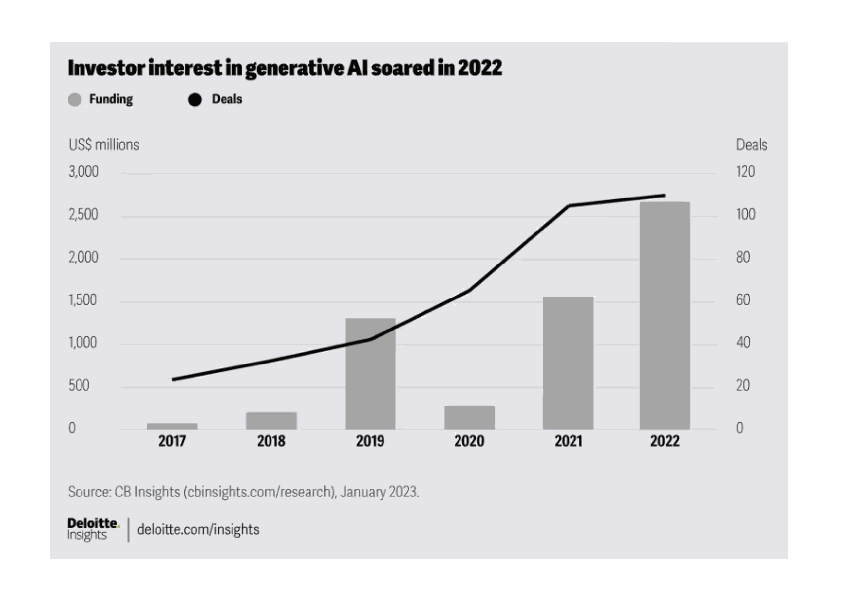

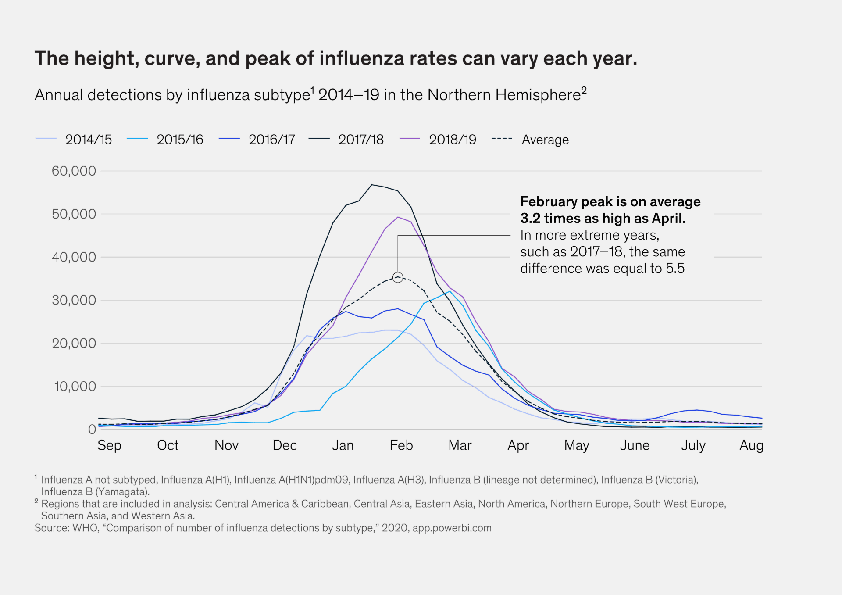

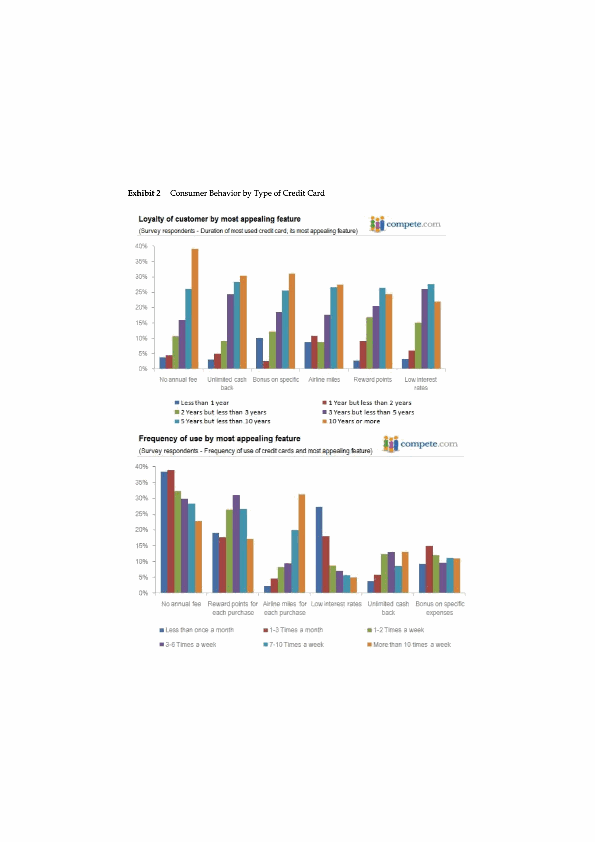

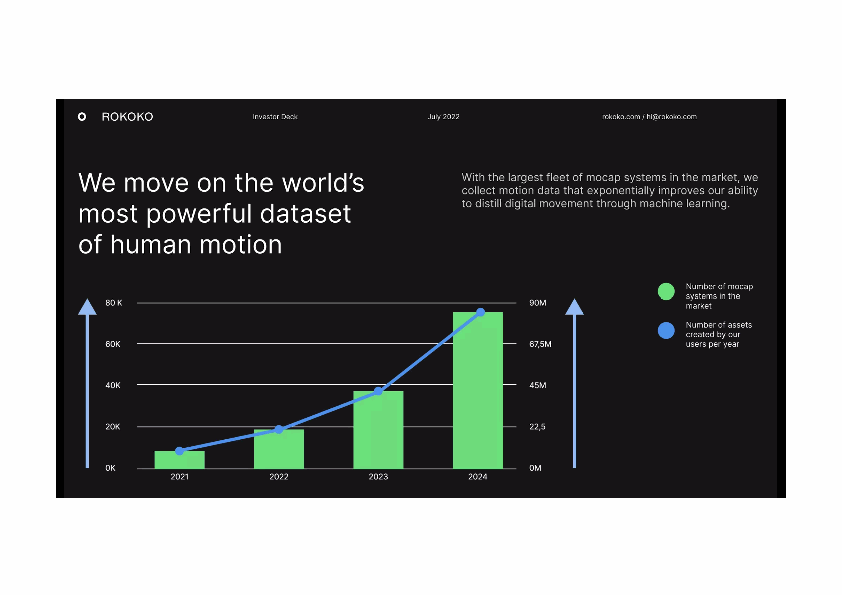

Multimodal models like GPT-4o and Gemini Flash are exceptional at inference and summarization tasks, where they approach human-level performance. However, we find that these models underperform humans on very specific 'reading and estimation' tasks, particularly for visual charts in business decks. This paper evaluates the accuracy of GPT-4o and Gemini Flash-1.5 in answering straightforward questions about data on labeled charts (where the data is clearly annotated on the graph) and unlabeled charts (where the data is not annotated and has to be inferred from the X and Y axes).

We conclude that these models are not currently capable of reading a deck accurately end-to-end if it contains any complex or unlabeled charts. Even if a user created a deck of only labeled charts, the model would read only 7-8 out of 15 labeled charts perfectly end-to-end.
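
To make the "perfectly end-to-end" criterion concrete, here is a minimal sketch of how such a per-chart count could be computed. It is not the scoring protocol used in this paper: the function names, the relative-tolerance rule, and the sample deck are all hypothetical, and we assume a chart counts as read perfectly only when every question about it is answered correctly.

```python
from typing import Dict, List, Tuple

# Each answer is a (model_value, true_value) pair for one question about a chart.
Answers = List[Tuple[float, float]]


def chart_read_perfectly(answers: Answers, tolerance: float = 0.0) -> bool:
    """Return True if every answer is within a relative tolerance of the truth.

    Assumption: tolerance=0.0 demands exact matches (plausible for labeled
    charts); a looser tolerance might suit unlabeled charts that require
    estimation from the axes.
    """
    return all(abs(pred - truth) <= tolerance * abs(truth) for pred, truth in answers)


def count_perfect_charts(deck: Dict[str, Answers], tolerance: float = 0.0) -> int:
    """Count the charts in a deck for which all answers are correct."""
    return sum(chart_read_perfectly(a, tolerance) for a in deck.values())


# Hypothetical three-chart deck; the values are illustrative, not from the paper.
deck = {
    "revenue_by_region": [(120.0, 120.0), (85.0, 85.0)],
    "headcount_trend": [(40.0, 40.0), (52.0, 55.0)],  # one misread value
    "market_share": [(0.31, 0.31)],
}

perfect = count_perfect_charts(deck)
print(f"{perfect} of {len(deck)} charts read perfectly")
```

Under this all-or-nothing rule a single misread value disqualifies the whole chart, which is why a per-chart count can be much lower than per-question accuracy.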