GPT-4o Still Haunted by Hallucinations

We hoped that GPT-4 or GPT-4o would solve hallucinations in RAG systems. Sadly, it doesn’t look like that’s the case.

Rob Balian

CTO

The First Step to Solving Hallucinations Is Finding Them: Introducing the isHallucinated API

Today we’re sharing a super simple API we’ve used to evaluate RAG and Agent systems.

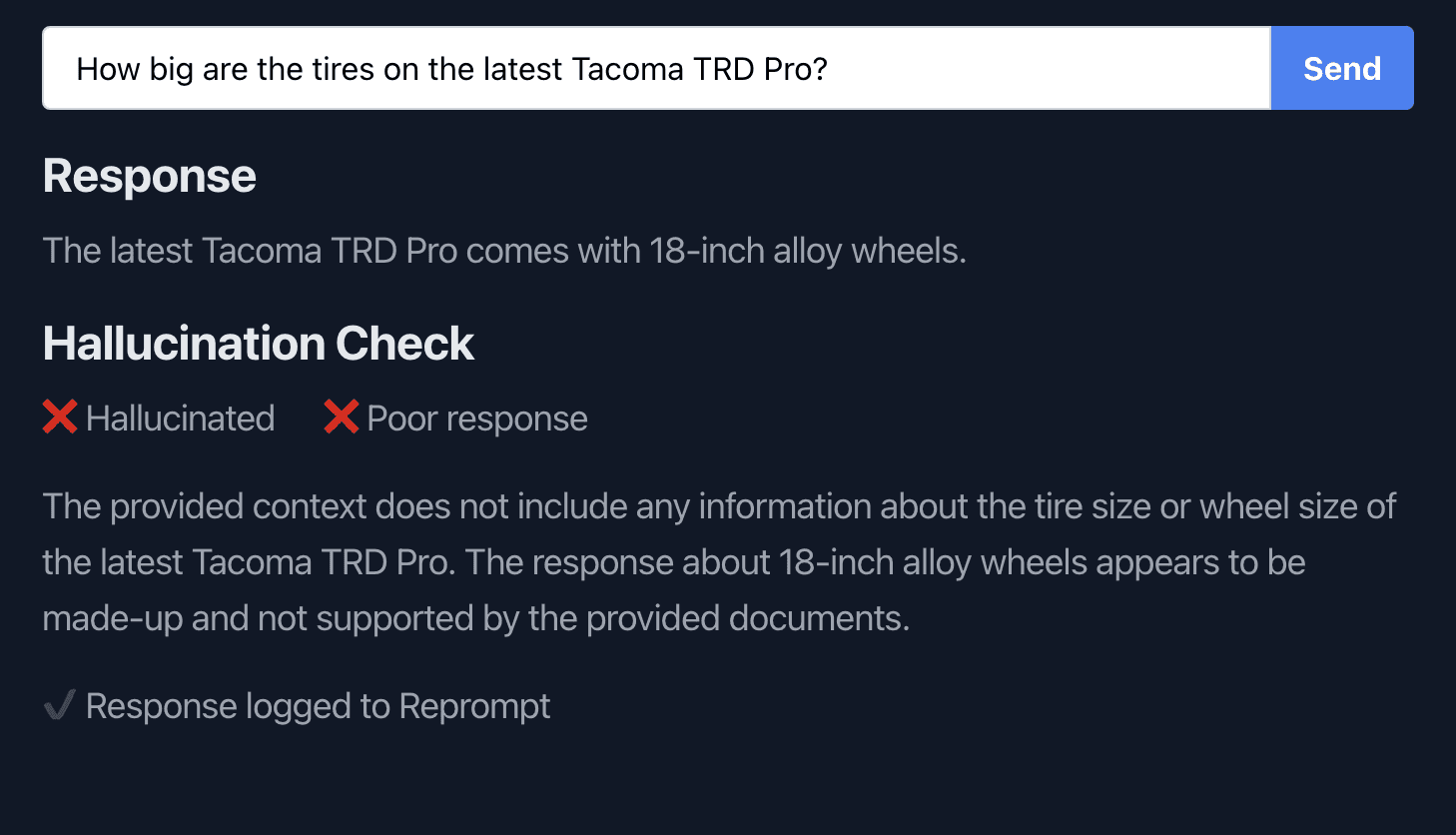

Classic Hallucination on a RAG Chatbot

The API runs a series of checks over the full system prompt (including context, retrievals, or function calls) to find common hallucination scenarios. We also check for poor responses that don't actually answer the user's need.
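To make "the full system prompt" concrete, here is a minimal sketch of the kind of record you might assemble before running any hallucination check. The `Interaction` class and its field names are hypothetical, chosen for illustration; they are not the API's actual request schema.

```python
from dataclasses import dataclass, field


@dataclass
class Interaction:
    """One logged exchange from a RAG or agent system (hypothetical shape)."""

    system_prompt: str
    user_query: str
    response: str
    retrievals: list[str] = field(default_factory=list)      # retrieved passages
    function_calls: list[str] = field(default_factory=list)  # serialized tool results

    def full_context(self) -> str:
        """Join everything the model was given, skipping empty parts."""
        parts = [self.system_prompt, *self.retrievals, *self.function_calls]
        return "\n\n".join(p for p in parts if p)
```

The point of keeping retrievals and function calls alongside the system prompt is that a response can only be judged against everything the model actually saw, not just the user's question.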

What we've learned: No one does AI evals because they’re a huge pain. Developers and PMs absolutely hate writing and running manual evals. It feels silly in the age of LLMs. The AI should do this for me! (We actually almost got into a fight with a customer because they wanted us to eval their system but wouldn't tell us what "good" looked like.)

isHallucinated() makes it easier to run evals automatically without building an entire eval pipeline, or even making a ground truth dataset. It’s not a full eval solution, and it’s not going to solve your hallucinations (yet), but it’s way easier than building your own pipeline, even with eval tools.

isHallucinated

isHallucinated: The response uses information outside of the input context or misinterprets the input context

isBadResponse: The response doesn’t answer the user’s query or is a non-answer
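As a rough sketch of how these two flags could be used over a batch of logged responses, the snippet below computes simple rates. Everything here is an assumption for illustration: `CheckResult`, `Checker`, and `summarize` are hypothetical names, and the `check` callable stands in for whatever actually performs the check (for example, a thin wrapper around the API). It does not show the real client or response format.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class CheckResult:
    """The two flags described above (hypothetical container)."""

    is_hallucinated: bool
    is_bad_response: bool


# A checker takes (context, user_query, response) and returns the two flags.
Checker = Callable[[str, str, str], CheckResult]


def summarize(rows: Iterable[tuple[str, str, str]], check: Checker) -> dict:
    """Run `check` on every (context, query, response) row and report rates."""
    results = [check(context, query, response) for context, query, response in rows]
    total = len(results)
    if total == 0:
        return {"total": 0, "hallucinated_rate": 0.0, "bad_response_rate": 0.0}
    return {
        "total": total,
        "hallucinated_rate": sum(r.is_hallucinated for r in results) / total,
        "bad_response_rate": sum(r.is_bad_response for r in results) / total,
    }
```

Passing the checker in as a function keeps the aggregation independent of how the flags are produced, so you can swap in a stub while testing and the real call in production. No ground truth labels are needed, only the logged context, query, and response.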

Hope this helps make evaling or debugging just a bit easier. Grab a free API key at repromptai.com or put us in touch with your eng team.